DGST: a Dual-Generator Network for Text Style Transfer

Xiao Li, Guanyi Chen, Chenghua Lin, Ruizhe Li

NLP Applications Short Paper

Abstract:

We propose DGST, a novel and simple Dual-Generator network architecture for text Style Transfer. Our model employs only two generators and does not rely on any discriminators or parallel corpora for training. Both quantitative and qualitative experiments on the Yelp and IMDb datasets show that our model achieves competitive performance compared with several strong baselines that use more complicated architecture designs.

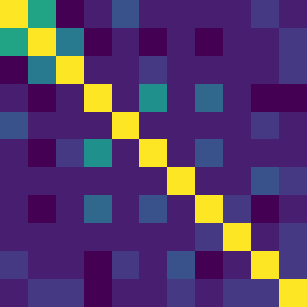

Connected Papers in EMNLP 2020

Similar Papers

Text Segmentation by Cross Segment Attention

Michal Lukasik, Boris Dadachev, Kishore Papineni, Gonçalo Simões,

Monolingual Adapters for Zero-Shot Neural Machine Translation

Jerin Philip, Alexandre Berard, Matthias Gallé, Laurent Besacier,

Reformulating Unsupervised Style Transfer as Paraphrase Generation

Kalpesh Krishna, John Wieting, Mohit Iyyer,