Semantic Evaluation for Text-to-SQL with Distilled Test Suites

Ruiqi Zhong, Tao Yu, Dan Klein

Semantics: Sentence-level Semantics, Textual Inference and Other areas (Long Paper)

Abstract:

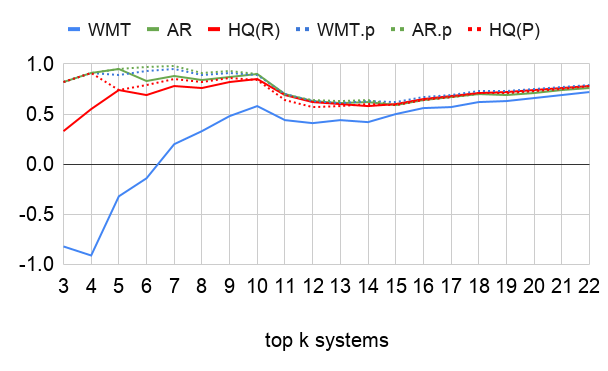

We propose test suite accuracy to approximate semantic accuracy for Text-to-SQL models. From a large number of randomly generated databases, our method distills a small test suite of databases that achieves high code coverage for the gold query. At evaluation time, it computes the denotation accuracy of the predicted queries on the distilled test suite, thereby efficiently calculating a tight upper bound on semantic accuracy. We use our proposed method to evaluate 21 models submitted to the Spider leaderboard and manually verify that our method is always correct on 100 examples. In contrast, the current Spider metric leads to a 2.5% false negative rate on average and 8.1% in the worst case, indicating that test suite accuracy is needed. Our implementation, along with distilled test suites for eleven Text-to-SQL datasets, is publicly available.

Connected Papers in EMNLP2020

Similar Papers

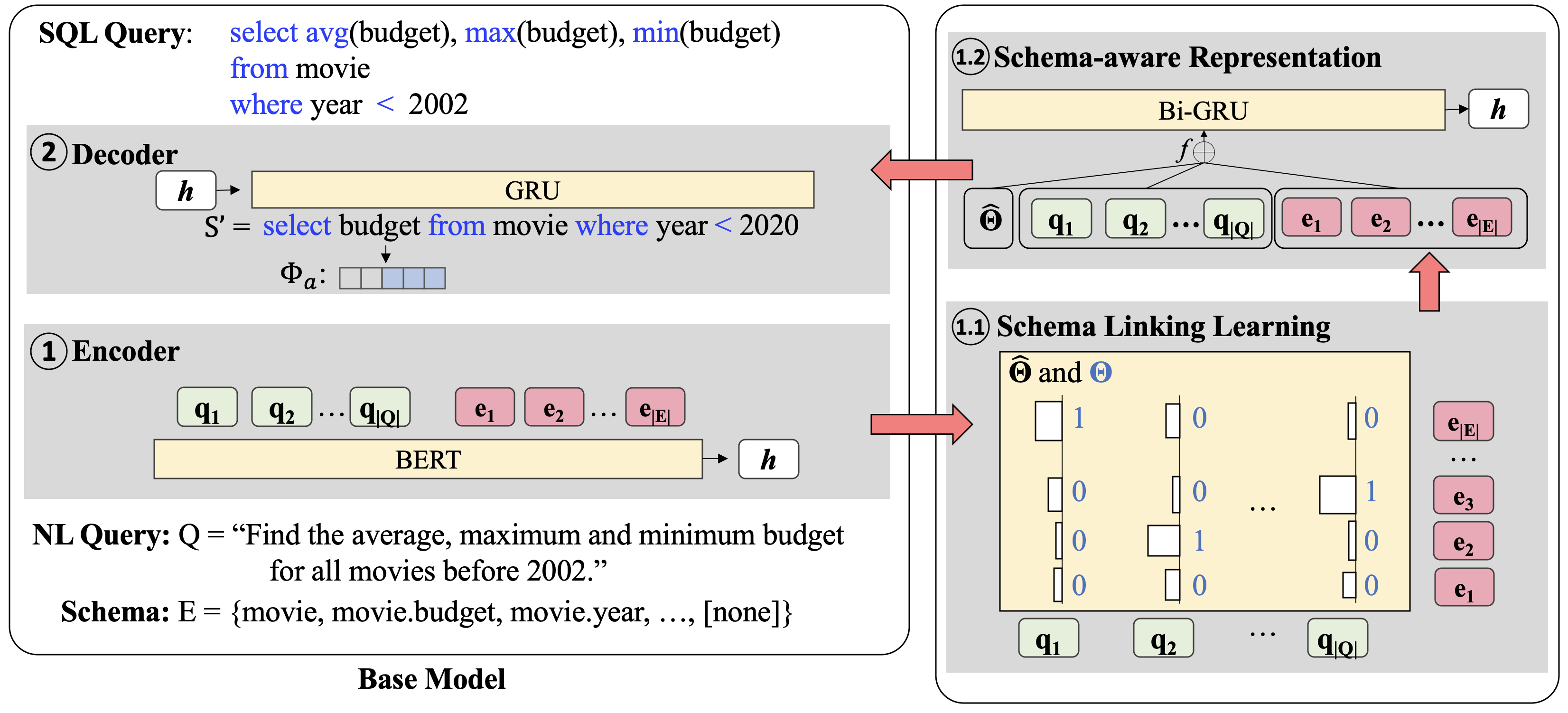

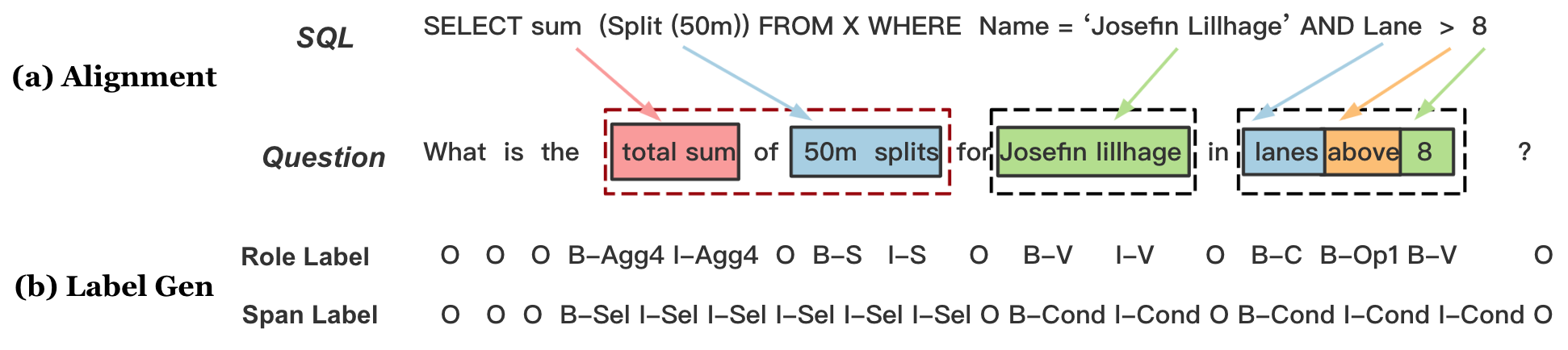

Mention Extraction and Linking for SQL Query Generation

Jianqiang Ma, Zeyu Yan, Shuai Pang, Yang Zhang, Jianping Shen

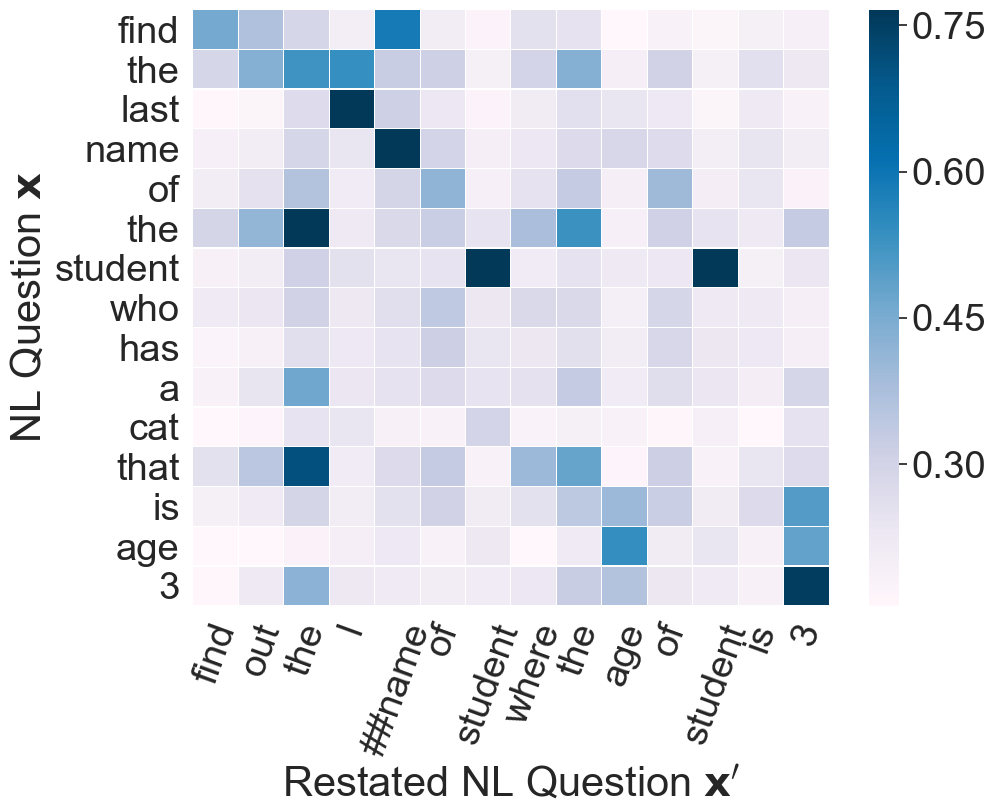

“What Do You Mean by That?” A Parser-Independent Interactive Approach for Enhancing Text-to-SQL

Yuntao Li, Bei Chen, Qian Liu, Yan Gao, Jian-Guang Lou, Yan Zhang, Dongmei Zhang

Re-examining the Role of Schema Linking in Text-to-SQL

Wenqiang Lei, Weixin Wang, Zhixin Ma, Tian Gan, Wei Lu, Min-Yen Kan, Tat-Seng Chua