The Curse of Performance Instability in Analysis Datasets: Consequences, Source, and Suggestions

Xiang Zhou, Yixin Nie, Hao Tan, Mohit Bansal

Semantics: Sentence-level Semantics, Textual Inference and Other areas

Long Paper


Abstract:

We find that the performance of state-of-the-art models on Natural Language Inference (NLI) and Reading Comprehension (RC) analysis/stress sets can be highly unstable. This raises three questions: (1) How will the instability affect the reliability of the conclusions drawn based on these analysis sets? (2) Where does this instability come from? (3) How should we handle this instability and what are some potential solutions? For the first question, we conduct a thorough empirical study over analysis sets and find that in addition to the unstable final performance, the instability exists all along the training curve. We also observe lower-than-expected correlations between the analysis validation set and standard validation set, questioning the effectiveness of the current model-selection routine. Next, to answer the second question, we give both theoretical explanations and empirical evidence regarding the source of the instability, demonstrating that the instability mainly comes from high inter-example correlations within analysis sets. Finally, for the third question, we discuss an initial attempt to mitigate the instability and suggest guidelines for future work such as reporting the decomposed variance for more interpretable results and fair comparison across models.
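One way to read the "decomposed variance" suggestion is through the standard identity for the variance of a mean: the variance of accuracy across training runs equals the sum of per-example variances plus the sum of inter-example covariances, both scaled by 1/N². The sketch below illustrates that identity only; it is not the authors' code. The function name `decompose_accuracy_variance` and the input layout (a hypothetical runs-by-examples table of 0/1 correctness collected over several random seeds) are assumptions made for illustration.

```python
from statistics import pvariance


def decompose_accuracy_variance(correct):
    """Split the across-run variance of accuracy into two parts.

    `correct[r][i]` is 1.0 if run r answered example i correctly, else 0.0.
    This input layout is a hypothetical choice for the sketch.

    Returns (total, independent, covariance), where
      total       = population variance of per-run accuracy,
      independent = (1/N^2) * sum of per-example variances,
      covariance  = total - independent, i.e. the contribution of
                    inter-example covariances.
    """
    n_runs = len(correct)
    n_examples = len(correct[0])

    # Accuracy of each run over the whole analysis set.
    accuracies = [sum(row) / n_examples for row in correct]
    total = float(pvariance(accuracies))

    # Variance each example would contribute if examples were independent.
    per_example = [
        pvariance([float(correct[r][i]) for r in range(n_runs)])
        for i in range(n_examples)
    ]
    independent = float(sum(per_example)) / n_examples ** 2

    # Whatever remains is due to correlations between examples.
    covariance = total - independent
    return total, independent, covariance


# Two runs whose predictions flip together on every example:
# the covariance term accounts for most of the total variance.
correlated = [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]
print(decompose_accuracy_variance(correlated))
```

Under this reading, a large covariance term relative to the independent term would indicate that the examples in a set tend to be answered correctly or incorrectly together, which is the kind of inter-example correlation the abstract identifies as the main source of instability.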


Connected Papers in EMNLP2020

Similar Papers

With Little Power Comes Great Responsibility

Dallas Card, Peter Henderson, Urvashi Khandelwal, Robin Jia, Kyle Mahowald, Dan Jurafsky

Learning from Context or Names? An Empirical Study on Neural Relation Extraction

Hao Peng, Tianyu Gao, Xu Han, Yankai Lin, Peng Li, Zhiyuan Liu, Maosong Sun, Jie Zhou

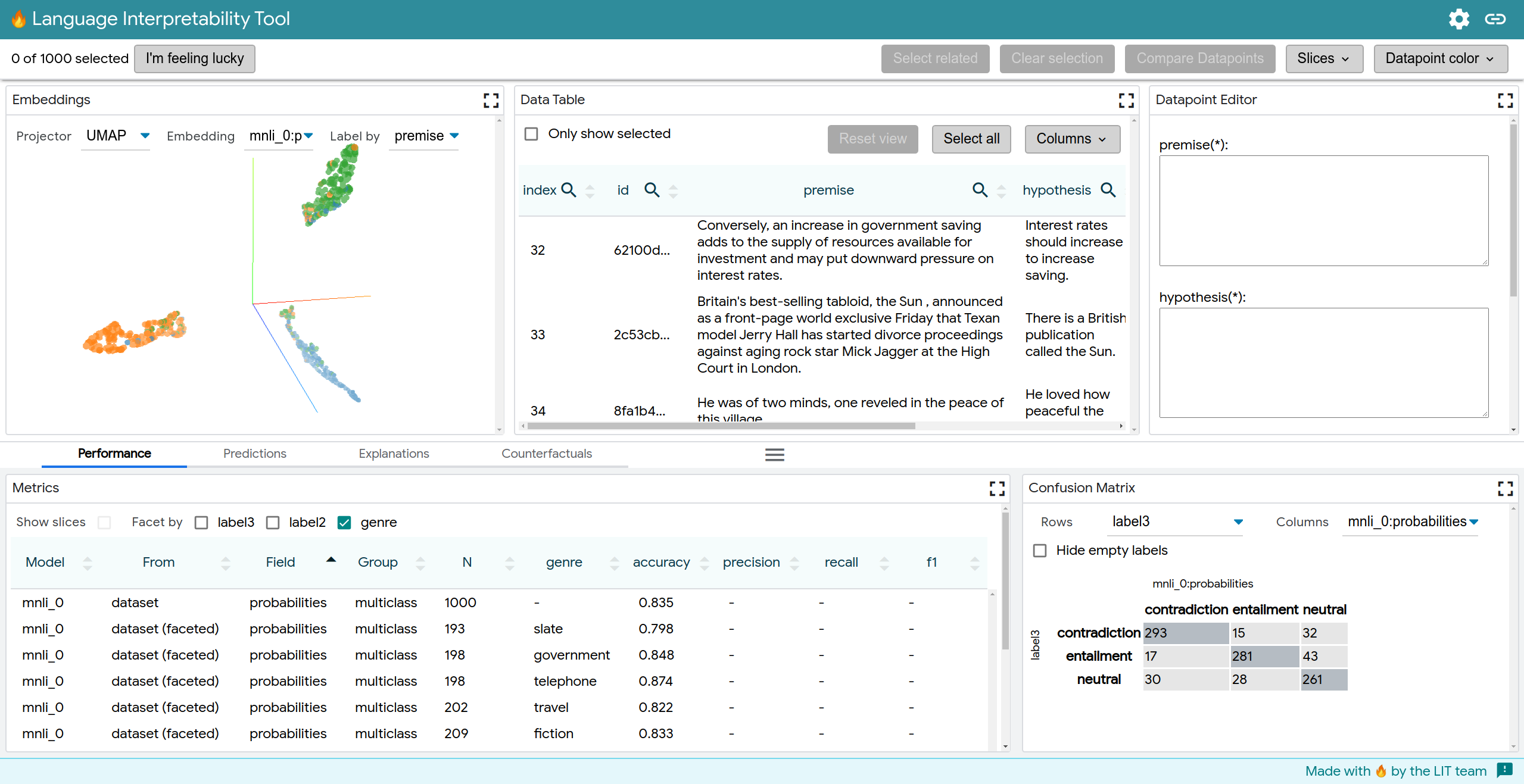

The Language Interpretability Tool: Extensible, Interactive Visualizations and Analysis for NLP Models

Ian Tenney, James Wexler, Jasmijn Bastings, Tolga Bolukbasi, Andy Coenen, Sebastian Gehrmann, Ellen Jiang, Mahima Pushkarna, Carey Radebaugh, Emily Reif, Ann Yuan

Exposing Shallow Heuristics of Relation Extraction Models with Challenge Data

Shachar Rosenman, Alon Jacovi, Yoav Goldberg