Incorporating a Local Translation Mechanism into Non-autoregressive Translation

Xiang Kong, Zhisong Zhang, Eduard Hovy

Machine Translation and Multilinguality Short Paper

Abstract:

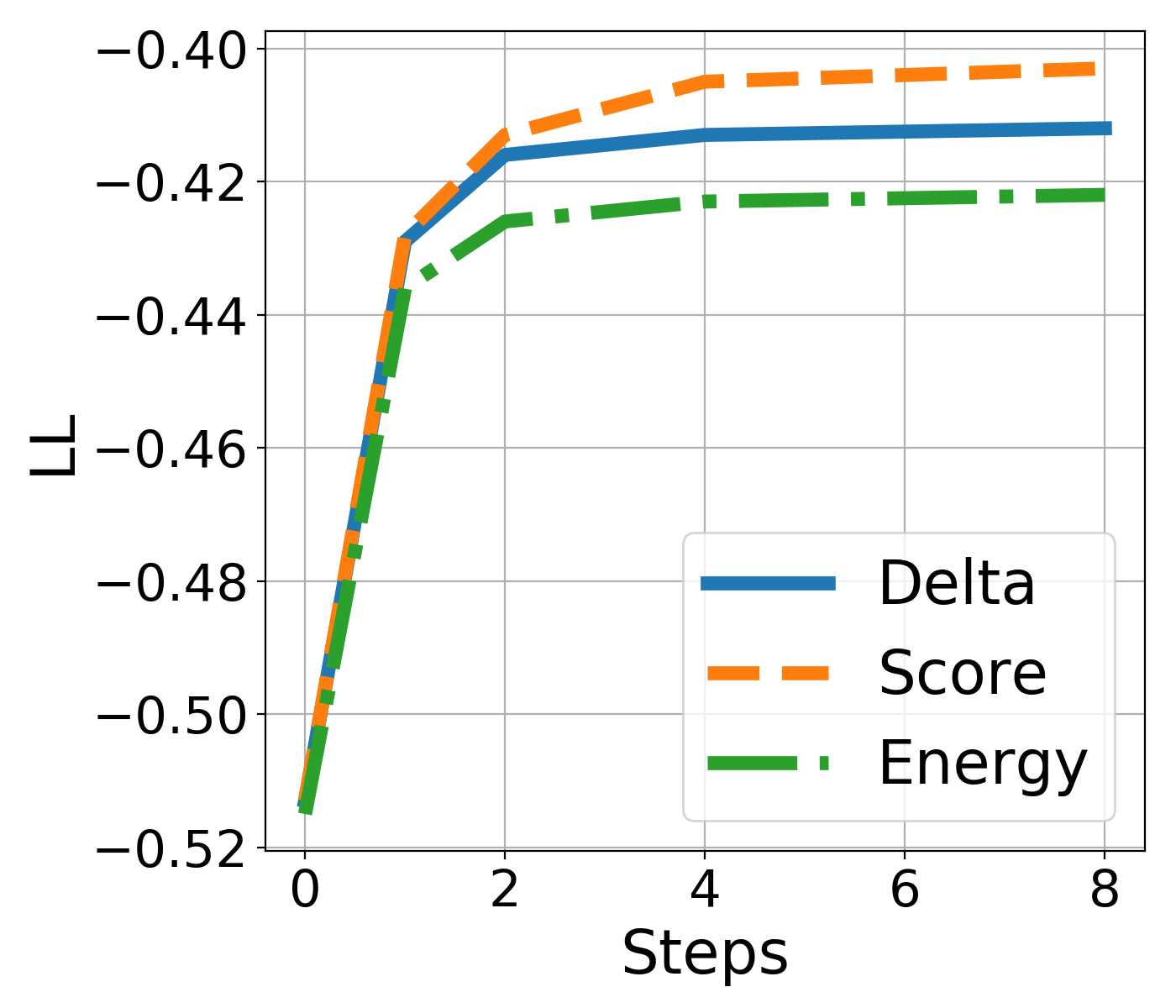

In this work, we introduce a novel local autoregressive translation (LAT) mechanism into non-autoregressive translation (NAT) models so as to capture local dependencies among target outputs. Specifically, for each target decoding position, instead of predicting only one token, we predict a short sequence of tokens in an autoregressive way. We further design an efficient merging algorithm to align and merge the output pieces into one final output sequence. We integrate LAT into the conditional masked language model (CMLM) (Ghazvininejad et al., 2019) and similarly adopt iterative decoding. Empirical results on five translation tasks show that, compared with CMLM, our method achieves comparable or better performance with fewer decoding iterations, bringing a 2.5x speedup. Further analysis indicates that our method reduces repeated translations and performs better on longer sentences. Our code will be released to the public.
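The idea of merging per-position output pieces can be illustrated with a minimal sketch. This is not the merging algorithm from the paper, whose details the abstract does not give; it is a simplified stand-in that assumes adjacent pieces overlap exactly, and it joins them greedily on the longest suffix/prefix match. The names `overlap_len` and `merge_pieces` and the example tokens are hypothetical.

```python
from typing import List


def overlap_len(left: List[str], right: List[str]) -> int:
    """Length of the longest suffix of `left` that is also a prefix of `right`."""
    for k in range(min(len(left), len(right)), 0, -1):
        if left[-k:] == right[:k]:
            return k
    return 0


def merge_pieces(pieces: List[List[str]]) -> List[str]:
    """Greedily merge left-to-right pieces, dropping duplicated overlap tokens.

    Simplifying assumption (not from the paper): overlaps are exact matches.
    """
    merged: List[str] = []
    for piece in pieces:
        k = overlap_len(merged, piece)
        merged.extend(piece[k:])  # append only the tokens not already covered
    return merged


# Hypothetical pieces, one short token sequence per decoding position.
pieces = [
    ["the", "cat", "sat"],
    ["cat", "sat", "on"],
    ["sat", "on", "the"],
    ["on", "the", "mat"],
]
print(" ".join(merge_pieces(pieces)))
```

A real system would also have to handle pieces that disagree with each other, which this exact-match sketch simply concatenates.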

Connected Papers in EMNLP 2020

Similar Papers

Consistent Transcription and Translation of Speech

Matthias Sperber, Hendra Setiawan, Christian Gollan, Udhay Nallasamy, Matthias Paulik

Simultaneous Machine Translation with Visual Context

Ozan Caglayan, Julia Ive, Veneta Haralampieva, Pranava Madhyastha, Loïc Barrault, Lucia Specia

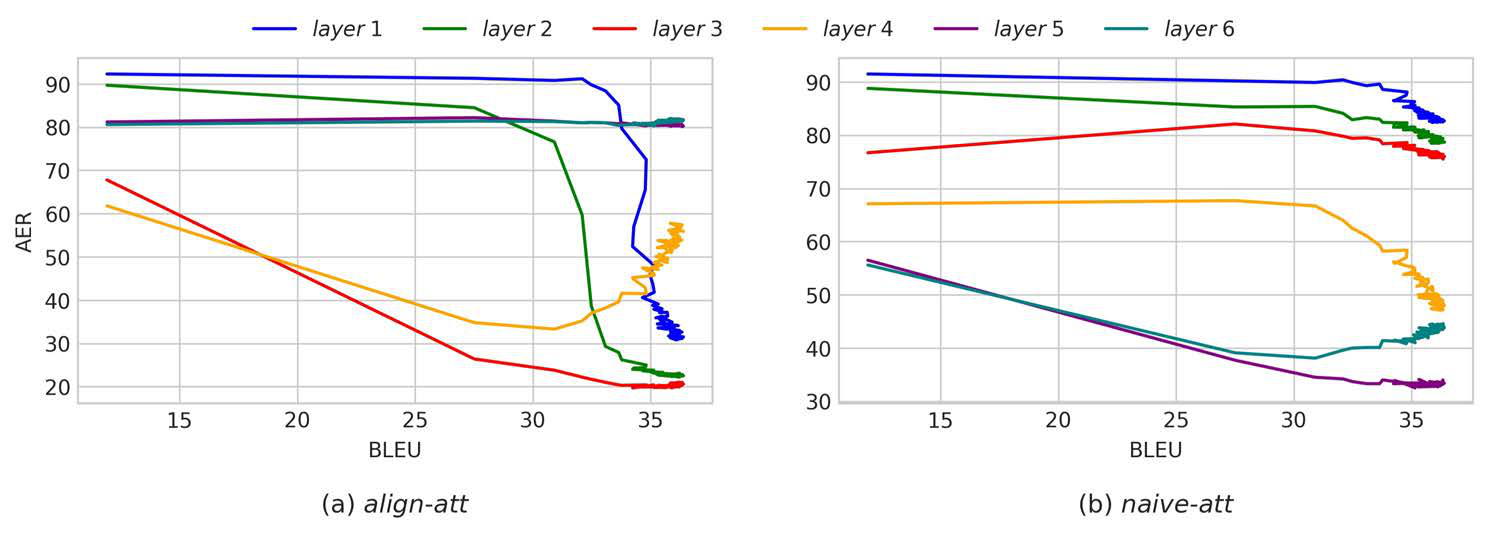

Accurate Word Alignment Induction from Neural Machine Translation

Yun Chen, Yang Liu, Guanhua Chen, Xin Jiang, Qun Liu

Iterative Refinement in the Continuous Space for Non-Autoregressive Neural Machine Translation

Jason Lee, Raphael Shu, Kyunghyun Cho